SIGTYP LECTURE SERIES

Summer/Autumn 2021 Calendar

Olga Zamaraeva: Typologically-driven Modeling of wh-Questions in a Grammar Engineering Framework

Studying language typology and studying syntactic structure formally are both ways to learn about the range of variation in human languages. These two ways are often pursued separately from each other. Furthermore, assembling the complex and fragmented hypotheses about different syntactic phenomena along multiple typological dimensions becomes intractable without computational aid. In response to these issues, the Grammar Matrix grammar engineering framework combines typology and syntactic theory within a computational paradigm. As such, it offers a robust scaffolding for testing linguistic hypotheses in interaction and with respect to a clear area of applicability. In this talk, I will present my recent work on modeling the syntactic structure of constituent (wh-)questions in a typologically attested range, within the Grammar Matrix framework. The presented system of syntactic analyses is associated with grammar artifacts that can parse and generate sentences, which allowed me to rigorously test the analyses on test suites from diverse languages. The grammars can be extended directly in the future to cover more phenomena and more lexical items. Generally, the Grammar Matrix framework is intended to create implemented grammars for many languages of the world, particularly for endangered languages. In computational linguistics, formalized syntactic representations produced by such grammars play a crucial role in creating annotations which are then used for evaluating NLP system performance and which could be used for augmenting training data as well, in low-resource settings. Such grammars were also shown to be useful in applications such as grammar coaching, and advancing this line of research can contribute to educational and revitalization efforts. 
The talk comprises four parts (one hour in total), with a Q&A session after each: 1) Introduction (focusing on NLP and language variation); 2) Computational syntax with HPSG; 3) Assembling typologically diverse analyses; 4) Future directions of research.

Jon Rawski: Typology Emerges from Computability

Typology, from the ancient Sanskrit grammarians through to Alexander von Humboldt, is known to require two databases: an "encyclopedia of categories" and an "encyclopedia of types". The mathematical study of computable functions gives a rich encyclopedia of categories, and processes in natural language a rich encyclopedia of types. This talk will connect the two, especially in morphology and phonology. Jon will: 1) overview classes of string-to-string functions (polyregular, regular, rational and subsequential); 2) use them to determine the scope and limits of linguistic processes; 3) analytically connect them to classes of transducers (and acceptors using algebraic semirings); 4) show their usefulness for Seq2Seq interpretability experiments, and implications for ML in NLP generally.
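As a small illustration of the most restrictive class named above, the following is a minimal sketch of a (left-)subsequential string-to-string function: a deterministic machine that reads the input left to right, writes output as it goes, and flushes a state-dependent string at the end. The rule it encodes (an "n" surfaces as "m" before "p" or "b") and all names in the code are hypothetical choices for illustration, not material from the talk.

```python
from typing import Tuple

# Hypothetical toy rule: "n" surfaces as "m" immediately before "p" or "b".
# States: "q0" (nothing pending) and "qn" (an "n" was read but not yet written).
LABIALS = {"p", "b"}
FINAL_OUTPUT = {"q0": "", "qn": "n"}  # string flushed when the input ends


def step(state: str, symbol: str) -> Tuple[str, str]:
    """Deterministic transition: returns (next_state, output_string)."""
    pending = "n" if state == "qn" else ""
    if symbol == "n":
        # Write any earlier pending "n" unchanged and hold the new one.
        return "qn", pending
    if symbol in LABIALS and pending:
        # The held "n" is followed by a labial, so it is written as "m".
        return "q0", "m" + symbol
    return "q0", pending + symbol


def transduce(word: str) -> str:
    """Apply the transducer to a whole word, including the final output."""
    state, out = "q0", []
    for symbol in word:
        state, chunk = step(state, symbol)
        out.append(chunk)
    out.append(FINAL_OUTPUT[state])
    return "".join(out)
```

For instance, `transduce("inpossible")` yields `"impossible"`, while a word-final "n" as in `transduce("an")` is written unchanged by the final output function.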

Tiago Pimentel: An Informative Exploration of the Lexicon

During my PhD I've been exploring the lexicon through the lens of information theory. In this talk, I'll give an overview of results detailing the distribution of information in words (are initial or final positions more informative?), and cross-linguistic compensations (if a language has more information per character, are its words shorter?). I'll also present two new information-theoretic operationalisations (of systematicity and lexical ambiguity) which allow us to analyse computational linguistics questions through corpus analyses -- relying only on natural (unsupervised) data.

Maria Ryskina: Informal Romanization Across Languages and Scripts

Informal romanization is an idiosyncratic way of typing non-Latin-script languages in the Latin alphabet, commonly used in online communication. Although the character substitution choices vary between users, they are typically grounded in shared notions of visual and phonetic similarity between characters. In this talk, I will focus on the task of converting such romanized text into its native orthography and present experimental results for Russian, Arabic, and Kannada, highlighting the differences specific to writing systems. I will also show how similarity-encoding inductive bias helps in the absence of parallel data, present comparative error analysis for unsupervised finite-state and seq2seq models for this task, and explore how combinations of the two model classes can leverage their different strengths.

Shruti Rijhwani: Cross-Lingual Entity Linking for Low-Resource Languages

Entity linking is the task of associating a named entity with its corresponding entry in a structured knowledge base (such as Wikipedia or Freebase). While entity linking systems for languages such as English and Spanish are well-developed, the performance of these methods on low-resource languages is significantly worse.

In this talk, I first discuss existing methods for cross-lingual entity linking and the associated challenges of adapting them to low-resource languages. Then, I present a suite of methods developed for entity linking that do not rely on resources in the target language. The success of our proposed methods is demonstrated with experiments on multiple languages, including extremely low-resource languages such as Tigrinya, Oromo, and Lao. Additionally, this talk will show how information from entity linking can be used with state-of-the-art neural models to improve low-resource named entity recognition.

David Inman: Conceptual Interdependence in Language Description, Typology, and NLP: Examples from Nuuchahnulth

The fields of language description, typology, and NLP can be and typically are pursued independently. However, approaching these from a perspective of interdependence reveals that methodologies in one can often answer or refine questions in another. Focusing on the example of coordination structures in Nuuchahnulth, a Wakashan language of British Columbia, I will walk through the connections among traditional linguistic fields and NLP, how these can inform each other, and why NLP researchers should be interested.

Bio:

David Inman's research is centered on Indigenous American languages, their linguistic properties, history, and typological profile. His doctoral research utilized computational tools to document properties of Nuuchahnulth, a Wakashan language spoken in Canada, and he continues investigating the challenges to syntactic theory that this language presents. At the University of Zurich, he is developing typological questionnaires targeting areal patterns in the Americas, and investigating how these overlap to produce areas of historically intense linguistic contact.

Tuhin Chakrabarty: NeuroSymbolic methods for creative text generation

Recent neural models have led to important progress in natural language generation (NLG) tasks. While pre-trained models have facilitated advances in many areas of text generation, the field of creative language generation, especially figurative language, is relatively unexplored. There are important challenges that need to be addressed, such as the lack of a large amount of training data as well as the inherent need for the common sense and connotative knowledge required for modeling these tasks. In this talk, I will present some of my recent work on neurosymbolic methods for controllable creative text generation, focusing on various types of figurative language (e.g. metaphor, simile, sarcasm). Additionally, I will discuss how we can borrow from theoretically grounded concepts of figurative language and use these inductive biases to make our generations closer to those of humans.

Sabrina Mielke: Fair Comparisons for Generative Language Models -- with a bit of Information Theory

How can we fairly compare the performance of generative models on multiple languages? We will see how to use probabilistic and information theory-based measures, first to evaluate (monolingual) open-vocabulary language models by total bits, and then to ponder the meaning of “information” and how to use it to compare machine translation models. In both cases, we get only a small glimpse of what might make languages easier or harder for models, but deviating from the polished conference talk, I will recount how I spent half a year on a super-fancy model that yielded essentially the same conclusions as a simple averaging step... The rest of the talk will be dedicated to work on actually building new open-vocabulary language models, and on evaluating and ameliorating such models' gender bias in morphologically rich languages.
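To make "total bits" concrete, here is a minimal sketch assuming we already have the probability a model assigned to each token of a held-out text. Summing surprisals over the whole text gives a quantity that does not depend on how the text was tokenized, unlike per-token perplexity. The function names and the per-character normalization are illustrative assumptions, not the talk's exact protocol.

```python
import math
from typing import Sequence


def total_bits(token_probs: Sequence[float]) -> float:
    """Sum of surprisals (in bits) over the probabilities a model
    assigned to each token of one text."""
    return -sum(math.log2(p) for p in token_probs)


def bits_per_character(token_probs: Sequence[float], text: str) -> float:
    """Total bits normalized by text length in characters (an assumed
    normalization, chosen so that differently tokenized models are comparable)."""
    return total_bits(token_probs) / len(text)
```

Two models that segment the same sentence into different numbers of tokens can then be compared by calling `total_bits` on each model's probabilities for that sentence.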

Bio:

Sabrina is a PhD student at Johns Hopkins University and a part-time research intern at HuggingFace, researching open-vocabulary language models for segmentation and tokenization. She has published and co-organized workshops and shared tasks on these topics as well as on morphology and typological analysis in ACL, NAACL, EMNLP, LREC, and AAAI. You can find her reminiscing about a time when formal language theory played a bigger role in NLP on Twitter at @sjmielke.

Richard Futrell: Investigating Information-Theoretic Influences on the Order of Elements in Natural Language

Why is human language the way it is? I claim that human languages can be modeled as codes that maximize information transfer subject to constraints on the process of language production and comprehension. I use this efficiency-based framework to formulate quantitative theories of the order of words, phrases, and morphemes, aiming to explain the typological universals documented by linguists as well as the statistical distribution of orders in massively cross-linguistic corpus studies. I present results about Greenbergian word order correlations, adjective order in English, and the order of verbal dependents in Hindi.

Bio:

Richard Futrell is an Assistant Professor in the Department of Language Science at the University of California, Irvine. His research focuses on language processing in humans and machines.

Eleanor Chodroff: Structure in Cross-linguistic Phonetic Realization

A central goal of linguistic study is to understand the range and limits of cross-linguistic variation. Cross-linguistic phonetic variation is no exception to this pursuit: previous research has provided some insight into expected universal tendencies, but access to relevant and large-scale speech data has only recently become feasible. In this talk, I focus on structure in cross-linguistic phonetic variation that may reflect a universal tendency for uniformity in the phonetic realisation of a shared feature. I present case studies from cross-talker variation within a language, and then insight from cross-linguistic meta-analyses and larger-scale corpus studies.

Bio:

Eleanor Chodroff is a Lecturer in Phonetics and Phonology at the University of York. She received her PhD in Cognitive Science from Johns Hopkins University in 2017 and did a post-doc at Northwestern University in Linguistics working on speech prosody. Her research focuses on the phonetics–phonology interface, cross-talker and cross-linguistic phonetic variation, speech prosody, and speech perception.

Amit Moryossef: Including Signed Languages in NLP

Signed languages are the primary means of communication for many deaf and hard-of-hearing individuals. Since signed languages exhibit all the fundamental linguistic properties of natural language, I believe that tools and theories of Natural Language Processing (NLP) are crucial to their modeling. However, existing research in Sign Language Processing (SLP) seldom attempts to explore and leverage the linguistic organization of signed languages. In this talk, I discuss the linguistic properties of signed languages and the current open questions and challenges in modeling them, and present my current research addressing these challenges.

Duygu Ataman: Machine Translation of Morphologically-Rich Languages: a Survey and Open Challenges

Morphologically-rich languages challenge neural machine translation (NMT) models with extremely sparse vocabularies where atomic treatment of surface forms is unrealistic. This problem is typically addressed by either pre-processing words into subword units or performing translation directly at the level of characters. The former is based on word segmentation algorithms optimized using corpus-level statistics with no regard to the translation task. The latter approach has shown significant benefits for translating morphologically-rich languages, although practical applications are still limited due to increased requirements in terms of model capacity. In this talk, we present an overview of recent approaches to NMT developed for translating morphologically-rich languages and open challenges related to their future deployment.

Bio:

Duygu Ataman holds a bachelor's and a master's degree in electrical engineering and computer science from Middle East Technical University and KU Leuven, respectively. She completed her Ph.D. in computer science in 2019 at the University of Trento under the supervision of Marcello Federico. In her doctoral research she studied unsupervised learning of morphology from the perspectives of linguistics, cognitive science and statistics, and designed a purely statistical formulation of it within the Bayesian framework, which could be implemented in decoders of neural machine translation models in order to generate better translations in morphologically-rich languages. During her Ph.D. she was also a visiting student at the School of Informatics, University of Edinburgh, advised by Dr. Alexandra Birch, and an applied scientist intern at Amazon Alexa Research. Having recently completed her post-doctoral research and studies at the Institute of Computational Linguistics, University of Zürich, she will soon join New York University's Courant Institute as an assistant professor and faculty fellow.

Ekaterina Vylomova: UniMorph and Morphological Inflection Task: Past, Present, and Future

In the 1960s, Hockett proposed a set of essential properties that are unique to human language such as displacement, productivity, duality of patterning, and learnability. Regardless of the language we use, these features allow us to produce new utterances and infer their meanings. Still, languages differ in the way they express meanings, or as Jakobson put it, “Languages differ essentially in what they must convey and not in what they may convey”. From a typological point of view, it is crucial to describe and understand the limits of cross-linguistic variation. In this talk, I will focus on cross-lingual annotation and regularities in inflectional morphology. More specifically, I will discuss the UniMorph project, an attempt to create a universal (cross-lingual) annotation schema, with morphosyntactic features that would occupy an intermediate position between the descriptive categories and comparative concepts. UniMorph allows an inflected word from any language to be defined by its lexical meaning, typically carried by the lemma, and a bundle of universal morphological features defined by the schema. Since 2016, the UniMorph database has been gradually developed and updated with new languages, and SIGMORPHON shared tasks have served as a platform to compare computational models of inflectional morphology. During 2016–2021, the shared tasks made it possible to explore the data-driven systems’ ability to learn declension and conjugation paradigms as well as to evaluate how well they generalize across typologically diverse languages. This is especially important, since elaboration of formal techniques of cross-language generalization and prediction of universal entities across related languages should open up new potential for the modeling of under-resourced and endangered languages. In the second part of the talk, I will outline certain challenges we faced while converting the language-specific features into UniMorph (such as case compounding).
I will also discuss typical errors made by the majority of the systems, e.g. incorrectly predicted instances due to allomorphy, form variation, misspelled words, and looping effects. Finally, I will provide case studies for Russian, Tibetan, and Nen.

Bio:

Ekaterina Vylomova is a Lecturer and a Postdoctoral Fellow at the University of Melbourne. Her research is focused on compositionality modelling for morphology, models of inflectional and derivational morphology, linguistic typology, diachronic language models, and neural machine translation. She co-organized the SIGTYP 2019–2021 workshops and shared tasks and the SIGMORPHON 2017–2021 shared tasks on morphological reinflection.

Adina Williams: How Strongly does Grammatical Gender Correlate with the Lexical Semantics of Nouns?

Since at least Ferdinand de Saussure, linguists have aimed to understand the strength and substance of the relationship between word meaning and word form. In this talk, I present several works that explore one particular aspect of this long-standing research program: grammatical gender. In particular, this presentation asks the following question: is there a statistically significant relationship between the morphological gender of a noun and its lexical meaning? I will present three recent studies that answer this question in the affirmative. These works measure the strength of the correlation between grammatical gender and several operationalizations of lexical meaning (using collocations and word embeddings). They also explore the relationship between meaning and orthographic form, uncovering related correlations for other grammatical systems (such as declension class). These works highlight how technical advancements in multilingual NLP tools and the increasing availability of large text corpora can shed light on some of the most enduring questions about the nature of language.

Bio:

Adina is a Research Scientist at Facebook AI Research in NYC. Her main research goal is to strengthen connections between linguistics, cognitive science, and natural language processing. Towards that end, she brings insights about human language to bear on training, evaluating, and debiasing ML-based NLP systems, and applies tools from NLP to uncover new facts about human language.

Kyle Gorman: On "Massively Multilingual" Natural Language Processing

Early work in speech & language processing was critiqued for an overwhelming focus on English (and a few other regionally hegemonic languages). In part, this reflected resource limitations of the time. In the first half of this talk, I will discuss various ways in which speech & language processing technologies can be said to be "monolingual" or "multilingual". I will identify several distinct tendencies pushing the field towards greater multilinguality and note some tensions between these various tendencies. In the second half of the talk I will discuss some of the work out of my lab exploiting free, massively multilingual data extracted from Wiktionary, a free online dictionary. These resources include UniMorph, a collection of morphological paradigms, and WikiPron, a collection of pronunciation dictionaries. I will discuss how these data are collected and vetted, and their use in a series of recent shared tasks hosted by special interest groups of the Association for Computational Linguistics.

Kyle Mahowald: “Deep” Subjecthood: Classifying Grammatical Subjects and Objects across Languages

What do contextual embedding models know about grammatical subjects and objects, and how does that knowledge vary typologically? To explore that question, I will present a variety of results, probing both humans and machines using a bespoke subject/object classification task. In the first part of the talk, I will show that type-level embeddings can explain a large part of the variance in whether a given noun is a subject, but that there are cases in which contextual models play a crucial role. In the second part of the talk, I explore subject/object classification in Multilingual BERT on both transitive and intransitive sentences, across languages that vary in morphosyntactic alignment. In particular, I explore how a classifier trained on transitive subjects and objects classifies held-out intransitive subjects, comparing the model performance within and across nominative/accusative and ergative/absolutive languages. I consider the implications of these results for linguistic theories of subjecthood.

Kayo Yin: Understanding, Improving and Evaluating Context Usage in Context-aware Machine Translation

Context-aware Neural Machine Translation (NMT) models have been proposed to perform document-level translation, where certain words require information from the previous sentences to be translated accurately. However, these models are unable to use context adequately and often fail to translate relatively simple discourse phenomena. In this talk, I will discuss methods to measure context usage in NMT by using human annotations and conditional cross mutual information, as well as training methods to improve context usage by supervising attention and performing contextual word dropout. I will also discuss ways to identify words that require context to translate and how to evaluate NMT models on these ambiguous phenomena, and present open challenges in document-level translation.

Bio:

Kayo Yin is a second-year Master's student at Carnegie Mellon University advised by Prof. Graham Neubig. Her research focuses on developing machine translation models that can break down communication barriers between different language users while ensuring everyone can benefit from language technologies in their preferred language. She is working on identifying and resolving translation ambiguities that arise in document-level translation, as well as developing NLP models that can process signed languages.

Tanja Samardžić: Language Sampling

Whenever we perform an experiment to test a model or a hypothesis, we need to decide what data to include. In NLP and linguistics, this means selecting a number of languages and a number of examples (sounds, words, utterances) from each language. How do we make this decision? In theory, this decision is part of the study design: we should select a proper sample to represent our target population. In practice, however, our decisions tend to be driven by many different factors such as data availability, our familiarity with a language, and ease of processing, but also factors such as political views and ethical concerns. While there is a growing awareness of biases potentially introduced by such factors, designing proper samples remains an open challenge. In this talk, I will review the most common data sampling criteria in NLP and linguistics, discuss several methods for dealing with biases (e.g. maximising diversity, controlling for phylogenetic dependence) and propose a few improvements for future work.

Bio:

Tanja is a computational linguist with a background in language theory and machine learning, currently a lecturer (Privatdozentin) and a group leader at the University of Zurich. Her research is about developing computational text processing methods and using them to test theoretical hypotheses on how language actually works. She holds a PhD in Computational Linguistics from the University of Geneva, where she studied in the group Computational Learning and Computational Linguistics (CLCL). She is committed to promoting and facilitating the use of computational approaches in the study of language.

Antonia Karamolegkou and Sara Stymne: Transfer Language Choice for Cross-Lingual Dependency Parsing

Lately there has been an increasing amount of work on cross-lingual learning, and how models for a target language, often with few resources, can be improved by using data from other languages. In this talk we will focus on cross-lingual dependency parsing, where the Universal Dependencies treebanks serve as a great test bed, containing harmonized annotations for a diverse set of languages. Specifically, we consider the case where we target specific languages, and especially how to choose good transfer languages. We explore the impact of a number of language similarity features on this choice, including genealogical, geographic and syntactic similarity, and also the impact of different text types and training set size. We present three studies on different aspects. In our first study we focus on Latin, and compare transfer languages from the Hellenic and Italic families. In our second study we investigate transfer language choice for a more diverse set of target and transfer languages. In our third study we investigate the performance when targeting speech and Twitter data.

Bio:

Antonia Karamolegkou is a postgraduate student in computational linguistics. She received her master's degree from Uppsala University with a thesis on argument mining. She is currently working as a software engineer in an Informatics and Technology company.

Sara Stymne is an assistant professor in computational linguistics at Uppsala University. She received her PhD from Linköping University with a thesis on machine translation. Her current main research focus is on cross-lingual methods for dependency parsing, with a special interest in the impact of the domain of training data.

Mathias Müller: Exploring a Sampling-based Alternative to Beam Search

In this talk I will discuss the potential of Minimum Bayes Risk (MBR) decoding -- a sampling-based decoding algorithm -- to replace beam search in machine translation.

Beam search is the de-facto standard decoding algorithm for many language generation problems. However, recent work has found that beam search itself causes or exacerbates well-known biases in machine translation. Minimum Bayes Risk (MBR) decoding was suggested as an alternative algorithm that does not search for the highest-scoring translation but operates on a pool of samples.

I will highlight that MBR does not alleviate well-known biases in machine translation, but, interestingly, increases the robustness to noise in the training data and to domain shift.
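The idea of operating on a pool of samples can be sketched as follows: each sample is scored by its average utility against the other samples, treated as pseudo-references, and the sample with the highest expected utility is returned. This is a minimal illustration under stated assumptions. The unigram-overlap F1 utility and all function names are stand-ins chosen for brevity, not the metric or the implementation used in the work presented.

```python
from collections import Counter
from typing import Callable, List


def unigram_f1(hyp: str, ref: str) -> float:
    """Toy utility (an assumption): F1 over whitespace-token overlap."""
    h, r = Counter(hyp.split()), Counter(ref.split())
    overlap = sum((h & r).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(h.values())
    recall = overlap / sum(r.values())
    return 2 * precision * recall / (precision + recall)


def mbr_decode(samples: List[str],
               utility: Callable[[str, str], float] = unigram_f1) -> str:
    """Return the sample with the highest average utility against all
    other samples. Raises ValueError if `samples` is empty."""
    def expected_utility(i: int) -> float:
        others = [s for j, s in enumerate(samples) if j != i]
        return sum(utility(samples[i], o) for o in others) / max(len(others), 1)

    best = max(range(len(samples)), key=expected_utility)
    return samples[best]
```

Because duplicates stay in the pool, a translation that is sampled often (or resembles many other samples) accumulates utility, which is what distinguishes this selection rule from picking the single highest-scoring hypothesis.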

Bio:

Mathias is a post-doc and lecturer at the University of Zurich. His current main interests are 1) the meta-sciences of scientific integrity, methodology and reproducibility applied to machine translation, 2) decoding algorithms and 3) sign language translation. In his personal life he is a father of two and a passionate musician.

Please find more information about him here: cl.uzh.ch/mmueller

Antonis Anastasopoulos: Extracting Linguistic Information from Text

In this talk I'll synthesize several works from a series of papers that focus on extracting elements of a descriptive grammar of a language directly from text. I'll focus on morphosyntactic rules, as well as on identifying interesting semantic subdivisions. I'll also talk about how these "rules" can be used for the evaluation of natural language generation systems, as well as potentially aid language learners.

Bio:

Antonios Anastasopoulos is an Assistant Professor in Computer Science at George Mason University. He received his PhD in Computer Science from the University of Notre Dame, advised by David Chiang, and then did a postdoc at the Language Technologies Institute at Carnegie Mellon University. His research is on natural language processing with a focus on low-resource settings, endangered languages, and cross-lingual learning, and is currently funded by the National Science Foundation, the National Endowment for the Humanities, Google, Amazon, and the Virginia Research Investment Fund.

Shauli Ravfogel: Linear Information Removal Methods

I will present Iterative Nullspace Projection (INLP), a method to identify subspaces within the representation space of neural LMs that correspond to arbitrary human-interpretable concepts such as gender or syntactic function. The method is data-driven and identifies those subspaces by training multiple orthogonal classifiers to predict the concept in focus. I will give an overview of some of our recent work, which demonstrates the utility of these concept subspaces for different goals: mitigating social bias in static and contextualized embeddings and assessing the influence of concepts on the model's behavior. I will then describe ongoing work which studies the theoretical aspects of this method, especially with regard to its optimality, and propose an alternative which uses a relaxed formulation of adversarial training.
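The iterative idea can be sketched in a few lines of NumPy: fit a linear predictor of the concept, project the representations onto the nullspace of its weight vector, and repeat on the projected data. This is a rough sketch under stated assumptions. A least-squares fit stands in for the linear classifier, labels are assumed to be coded as -1/+1, and the function names are hypothetical; it is not the released implementation.

```python
import numpy as np


def nullspace_projection(w: np.ndarray) -> np.ndarray:
    """Orthogonal projection onto the nullspace of the single direction w."""
    w = w / np.linalg.norm(w)
    return np.eye(w.shape[0]) - np.outer(w, w)


def inlp(X: np.ndarray, y: np.ndarray, n_iters: int = 3) -> np.ndarray:
    """X: (n, d) representations; y: (n,) labels in {-1, +1}.
    Returns a (d, d) projection that removes up to n_iters directions."""
    d = X.shape[1]
    P = np.eye(d)
    for _ in range(n_iters):
        Xp = X @ P
        # Stand-in linear classifier (assumption): minimum-norm least squares.
        w, *_ = np.linalg.lstsq(Xp, y, rcond=None)
        w = P @ w  # keep the direction inside the remaining subspace
        if np.linalg.norm(w) < 1e-8:
            break  # nothing linearly predictive is left
        # w lies in the range of P, so the product is again an
        # orthogonal projection, now with one more direction removed.
        P = P @ nullspace_projection(w)
    return P
```

Applying the result as `X @ P` gives representations from which the directions found by the successive predictors have been removed; each new direction is orthogonal to the earlier ones because it is fitted on already-projected data.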

Bio:

I am starting my second year as a PhD student at Bar Ilan University (supervised by Prof. Yoav Goldberg). I am interested in representation learning, analysis and interpretability of neural models, and the syntactic abilities of NNs. Specifically, I am interested in the way neural models learn distributed representations that encode structured information, in the way they utilize those representations to solve tasks, and in our ability to control their content and map them back to interpretable concepts. During my master's I mainly worked on the ability of NNs to acquire syntax in typologically-diverse languages, and during my PhD so far I've been working on developing tools to remove information from neural representations in a controlled manner.

Claire Bowern: Linguistics and Voynichese

In this talk I give a brief overview of work that has been done so far on figuring out whether there is linguistic "signal" underlying the text of the Voynich Manuscript (Beinecke MS 408), an early 15th Century document which has so far been impossible to read. I discuss research which aims to tell language from non-language, outline some issues with doing research on Voynichese, and discuss some possibilities for future work.

Sabine Weber: Using the Distributional Inclusion Hypothesis for Unsupervised Entailment Detection

The task of Entailment Detection has received a lot of attention, for example as part of the GLUE benchmark. In recent work it is mostly treated as a sentence level task and is approached as a supervised learning problem using large language models and human annotated data. These approaches have known caveats like poor explainability and low inter-annotator agreement. Moreover, the need for human annotation makes it difficult to adapt existing models to new languages.

We present an approach that frames the task as a word-level problem and uses the distributional inclusion hypothesis to learn entailment relations in English and German from a large corpus of news text, without human-annotated data. We will give a detailed introduction to the distributional inclusion hypothesis and compare the advantages and disadvantages of our approach to popular language-model-based approaches.
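Under the distributional inclusion hypothesis, if one word entails another, the contexts in which the narrower word occurs should largely be included in the contexts of the broader word. One common way to turn this into a directional score is a weighted inclusion ratio in the style of Weeds precision, sketched below. The context counts in the usage note are invented toy data, the function name is hypothetical, and this is a generic illustration rather than the scoring function used in the work presented.

```python
from typing import Dict


def inclusion_score(narrow: Dict[str, float], broad: Dict[str, float]) -> float:
    """Share of the narrow word's context weight that falls on contexts
    also observed with the broad word (1.0 means full inclusion)."""
    total = sum(narrow.values())
    if total == 0:
        return 0.0
    shared = sum(weight for feature, weight in narrow.items() if feature in broad)
    return shared / total
```

With toy context counts such as `dog = {"bark": 2, "eat": 3, "sleep": 1}` and `animal = {"eat": 5, "sleep": 4, "run": 2, "bark": 1, "fly": 3}`, the score from `dog` to `animal` is higher than the score in the reverse direction, and that asymmetry is what is read as evidence for the direction of entailment.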

Bio:

Sabine Weber is a fourth-year PhD student at the University of Edinburgh working with Mark Steedman. She is currently finishing her thesis. She specialises in entailment detection, multilingual applications, relation extraction and named entity typing, and has a strong interest in explainability and AI ethics.

Khuyagbaatar Batsuren: Understanding and Exploiting Language Diversity: Building Linguistic Resources from Cognate to Morphology

Many multilingual lexical resources (BabelNet, ConceptNet, Universal Dependencies, UniMorph) have been developed for the NLP and linguistics communities in the past decade. These resources display language diversity at every level of linguistic description, such as word, sense, syntax, and morphology. This talk has three parts. First, I will discuss the main idea of how language diversity can be understood and exploited to develop the linguistic resources themselves, with distinguishing homonyms from polysemes as the case study. Second, I will talk about how we built CogNet, a large-scale, high-quality cognate database. Third, I will talk about how we built MorphyNet, a large multilingual morpheme database of derivational and inflectional morphology.

Bio:

Khuyagbaatar Batsuren is an associate professor at the National University of Mongolia. He received his Ph.D. in Computational Linguistics from the University of Trento in 2018 and then worked as a postdoc for one year. His research focuses on multilingual NLP, language diversity, cognates, lexical gaps, and morphology.

Aniello De Santo: Mathematical Linguistics and Typological Complexity

The complexity of linguistic patterns is the object of extensive debate in research programs focused on probing the inherent structure of human language abilities. But in what sense is one linguistic phenomenon more complex than another, and what can complexity tell us about the connection between linguistic typology and human cognition? In this talk, I approach these questions from the particular perspective of formal language theory.

I will first broadly discuss how language-theoretic characterizations allow us to focus on essential properties of the linguistic patterns under study. I will emphasize how typological insights can help us refine existing mathematical characterizations, arguing for a two-way bridge between disciplines, and show how the theoretical predictions made by mathematical formalizations of typological generalizations can be tested in Artificial Grammar Learning experiments.

In doing so, I aim to illustrate the relevance of mathematically grounded approaches to cognitive investigations into linguistic generalizations, and thus further fruitful cross-disciplinary collaborations.

Bio:

Aniello De Santo is an Assistant Professor in the Linguistics Department at the University of Utah.

Before joining Utah, he received a PhD in Linguistics from Stony Brook University. His research broadly lies at the intersection between computational, theoretical, and experimental linguistics. He is particularly interested in investigating how linguistic representations interact with general cognitive processes, with particular focus on sentence processing and learnability. In his past work, he has mostly made use of symbolic approaches grounded in formal language theory and rich grammar formalisms (Minimalist Grammars, Tree Adjoining Grammars).

Aleksandrs Berdicevskis: "Typology, will you marry sociolinguistics?" asks NLP

In this talk, I will:

— claim that in order to explain typology, we must understand language change;

— argue that understanding language change is inhibited by how difficult it is to quantitatively and systematically test relevant theories and hypotheses;

— describe how such quantitative tests can be carried out using very large annotated corpora of social media;

— show some very preliminary results from performing such tests on Swedish data.

Badr M. Abdullah: Capturing Cross-linguistic Similarity with Speech Representation Learning

The recent advances in speech representation learning have enabled the development of end-to-end neural models that are trained on actual acoustic realizations of spoken language. In addition to their successful applications in speech technology, these models offer a promising cognitive framework to model human speech processing. However, our current understanding of neural networks for speech is limited and their utility for cognitively motivated research requires further investigation. In this talk, I will present our ongoing research where we analyze the emergent representations in neural networks trained for speech processing tasks from a cross-linguistic perspective. Concretely, I will present two case studies: (1) an analysis of the emergent representations in a model of spoken language identification (SLID) for related languages, and (2) a cross-lingual representation similarity analysis (RSA) for models of spoken-word processing that simulate lexical access. I will show that the presented models capture cross-linguistic similarity in their representations in ways that largely correspond to our linguistic intuitions. However, traditional notions of cross-linguistic distance (e.g., phylogenetic and geographic distances) might not always be the best predictive factors of the (emergent) representational similarity. I will conclude by highlighting research challenges in this domain and outlining future research directions.
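For readers unfamiliar with representation similarity analysis (RSA), the idea can be sketched as follows: compute pairwise distances between the same set of stimuli in two representation spaces, then correlate the two sets of distances. This is a minimal sketch under stated assumptions, not the analysis used in the talk. The choice of cosine distance and Pearson correlation, the function names, and the toy vectors are all assumptions for illustration (rank correlations such as Spearman are also commonly used).

```python
import math
from itertools import combinations


def cosine_distance(a, b):
    """1 minus the cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def pairwise_distances(vectors):
    """Distances for every unordered pair of stimuli, in a fixed order."""
    return [cosine_distance(vectors[i], vectors[j])
            for i, j in combinations(range(len(vectors)), 2)]


def pearson(xs, ys):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def rsa_score(reps_a, reps_b):
    """Correlate the pairwise-distance structure of two representation spaces.

    reps_a[i] and reps_b[i] must be representations of the same stimulus.
    """
    return pearson(pairwise_distances(reps_a), pairwise_distances(reps_b))


# Hypothetical toy representations of three stimuli in two models.
model_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
model_b = [[2.0, 0.0], [0.0, 2.0], [2.0, 2.0]]  # same geometry, rescaled

print(rsa_score(model_a, model_b))
```

Because only the distance structure is compared, the two spaces may have different dimensionalities, which is what makes this kind of comparison usable across models trained on different languages.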

Badr is a third-year PhD student at Saarland University. His main research interest is to study the impact of linguistic experience on speech processing using computational models. To that end, he is currently developing a cognitively motivated analysis framework to investigate the extent to which neural networks for speech can predict the facilitatory effect of language similarity on cross-language speech processing tasks. In his free time, Badr enjoys reading, cooking, cycling, hiking, and doing nothing for the sake of doing nothing. To follow Badr on Twitter, find badr_nlp.

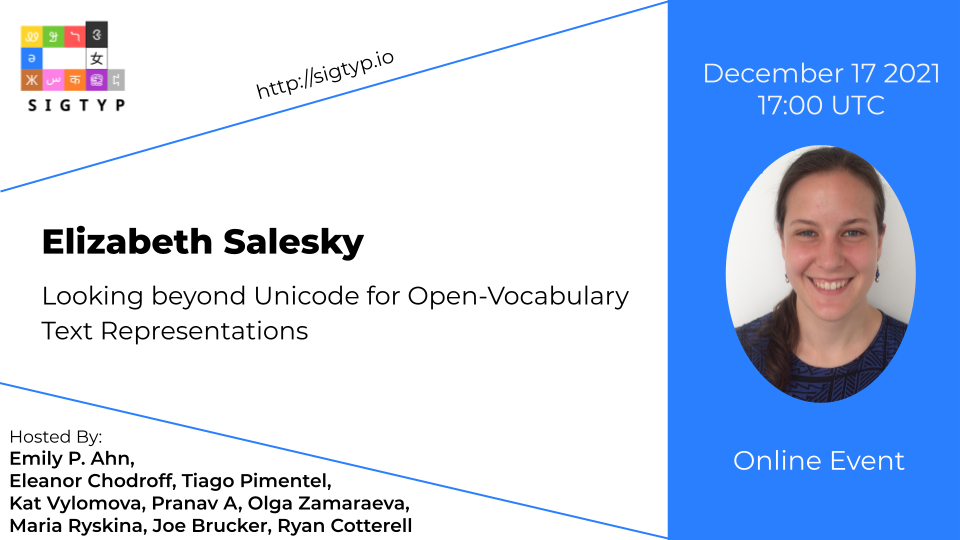

Elizabeth Salesky: Looking beyond Unicode for Open-Vocabulary Text Representations

Models of text typically have finite vocabularies, and commonly use subword segmentation techniques to achieve an 'open vocabulary.' This approach relies on consistent and correct underlying Unicode sequences, and degrades when presented with common types of noise, variation, and rare forms. In this talk, I will discuss an alternate approach in which we render text as images and learn open-vocabulary representations along with the downstream task, which in our work is machine translation. We show that models using visual text representations approach or match the performance of traditional Unicode-based models for several language pairs and scripts, with significantly greater robustness. I will also discuss several open questions and avenues for future work.

Elizabeth Salesky is a third-year PhD student at Johns Hopkins University. Generally, she is interested in machine translation and language representations, and in how to build more data-efficient models that are robust to the variation observed across different languages and data sources.